Systems Engineering and Electronics ›› 2021, Vol. 43 ›› Issue (4): 1036-1043. doi: 10.12305/j.issn.1001-506X.2021.04.21

• Guidance, Navigation and Control •

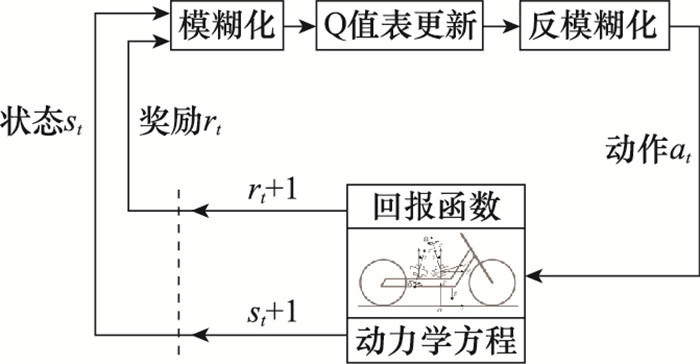

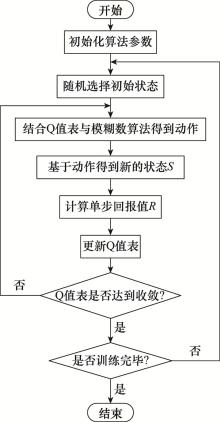

Attitude balance control of two-wheeled robot based on fuzzy reinforcement learning

An YAN1, Zhang CHEN2,*, Chaoyang DONG1, Kanghui HE1

1. School of Aeronautic Science and Engineering, Beihang University, Beijing 100191, China

2. Department of Automation, Tsinghua University, Beijing 100084, China

Received: 2020-06-13 Online: 2021-03-25 Published: 2021-03-31

Contact: Zhang CHEN E-mail: yanan801@buaa.edu.cn; cz_da@tsinghua.edu.cn; dongchaoyang@buaa.edu.cn; 502711921@qq.com


Cite this article

An YAN, Zhang CHEN, Chaoyang DONG, Kanghui HE. Attitude balance control of two-wheeled robot based on fuzzy reinforcement learning[J]. Systems Engineering and Electronics, 2021, 43(4): 1036-1043.


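Table 4 Parameters of the robot model

| Parameter | Symbol | Value |
| --- | --- | --- |
| Flywheel mass/kg | mf | 15.4 |
| Flywheel moment of inertia about the X axis/(kg·m²) | Ifx | 0.0457 |
| Flywheel moment of inertia about the Y axis/(kg·m²) | Ify | 0.0457 |
| Flywheel moment of inertia about the Z axis/(kg·m²) | Ifz | 0.085 |
| Gyro gimbal principal-axis moment of inertia/(kg·m²) | — | 0 |
| Body principal-axis moment of inertia/(kg·m²) | Iby | 14.56 |
| Height of body center of mass/m | hb | 0.35 |
| Height of flywheel center of mass/m | hf | 0.3 |
| Height of gyro gimbal center of mass/m | hg | 0 |
| Body mass/kg | mb | 89.2 |
| Flywheel spin angular speed/rpm | Ω | 4000 |
| Gyro gimbal mass/kg | mg | 0 |
| Gravitational acceleration/(N/kg) | g | 9.8 |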


