Systems Engineering and Electronics ›› 2025, Vol. 47 ›› Issue (10): 3300-3312. doi: 10.12305/j.issn.1001-506X.2025.10.17

• Systems Engineering •

Continual learning mechanism for intelligent flight conflict resolution algorithm

Dong SUI, Xiangrong CAI

College of Civil Aviation, Nanjing University of Aeronautics and Astronautics, Nanjing 211106, China

Received: 2024-04-11 Online: 2025-10-25 Published: 2025-10-23

Contact: Xiangrong CAI

Cite this article

Dong SUI, Xiangrong CAI. Continual learning mechanism for intelligent flight conflict resolution algorithm[J]. Systems Engineering and Electronics, 2025, 47(10): 3300-3312.


Table 2 Experiment models setup

| Base model | Algorithm | Training dataset | Resulting model |
| --- | --- | --- | --- |
| None | ACKTR | | |
| None | ACKTR | | |
| None | ACKTR | | |
| None | ACKTR | | |
| | ACKTR+EWC | | |
| | ACKTR+EWC | | |
| | ACKTR+MAML | | |
| | ACKTR+MAML | | |

Table 3

Hyper parameter settings of conflict resolution experiment based on EWC algorithm"

| 超参数 | 参数值 | 超参数 | 参数值 | |

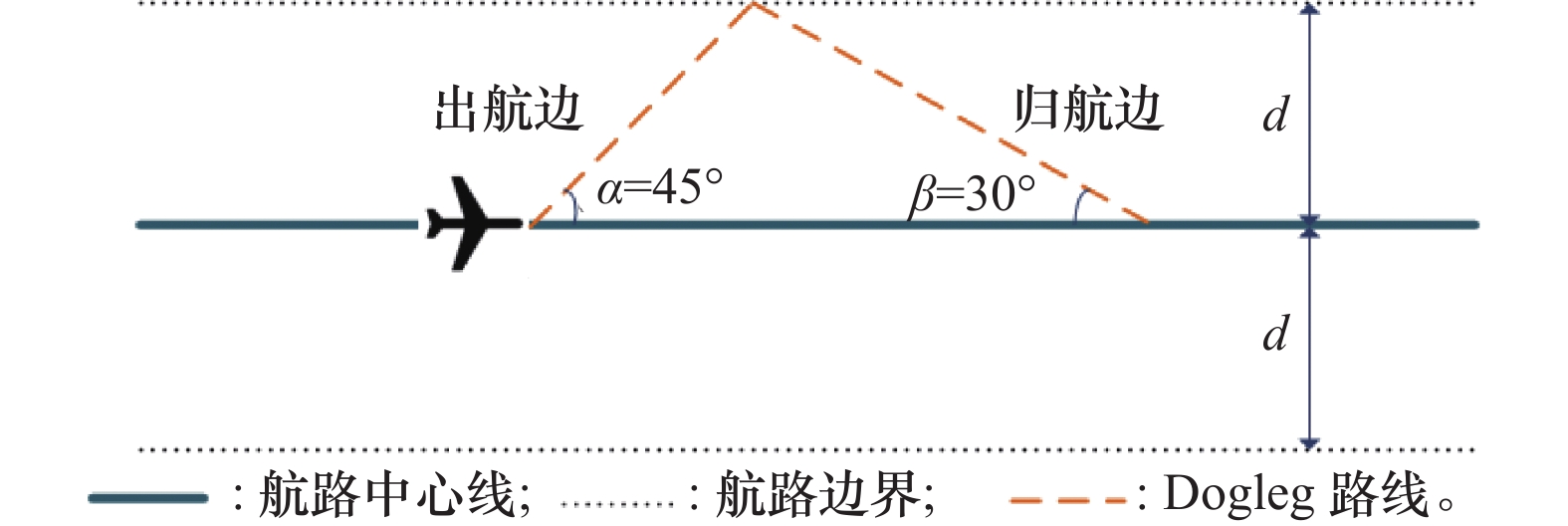

| 策略网络类型 | MLP | 策略网络层数 | 6 | |

| 策略网络隐藏层节点数 | 64 | 策略网络更新频率 | 1 | |

| 价值函数网络类型 | MLP | 价值函数网络层数 | 6 | |

| 价值函数网络隐藏层节点数 | 64 | 价值函数网络更新频率 | 1 | |

| 策略网络学习率 | 5e-4 | 价值函数网络学习率 | 5e-4 | |

| 折扣因子 | 0.99 | 批采样数 | 32 | |

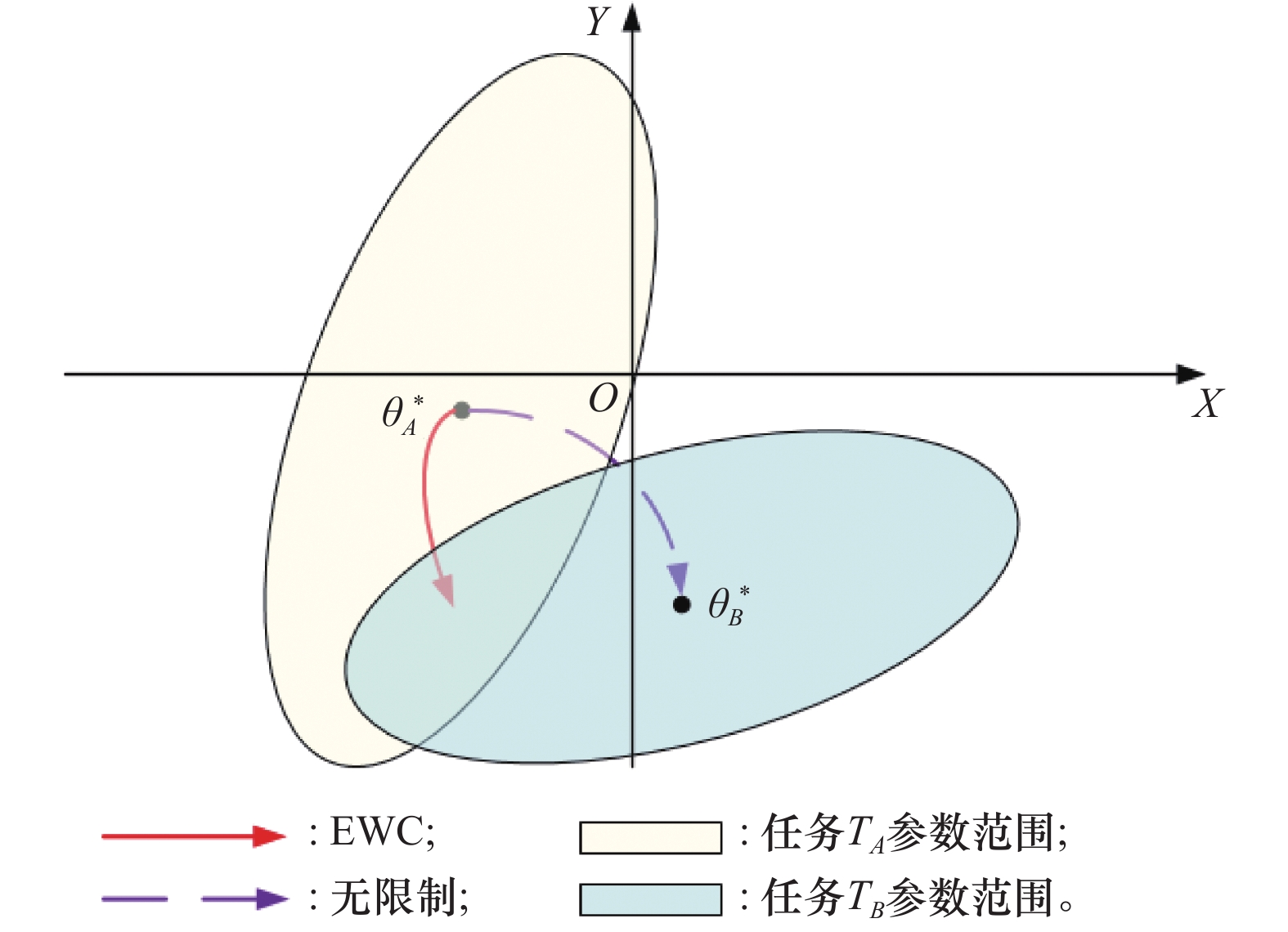

| 训练步长 | 1e6 | 策略损失系数 | 0.1 | |

| 值损失系数 | 0.1 | 熵损失系数 | 0.01 | |

| 旧任务重要性参数 | — | — |
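The old-task importance parameter (旧任务重要性参数) in Table 3 is the weight that EWC places on staying close to the parameters learned on earlier tasks. The following is a minimal sketch of that idea, assuming the standard EWC quadratic penalty (Kirkpatrick et al., 2017) with a diagonal Fisher information estimate. It is not the authors' implementation: the function names, the plain-list parameter representation, and the value of `lam` are illustrative assumptions.

```python
from typing import Sequence


def ewc_penalty(
    theta: Sequence[float],
    theta_old: Sequence[float],
    fisher: Sequence[float],
    lam: float,
) -> float:
    """Return (lam / 2) * sum_i F_i * (theta_i - theta_old_i)^2.

    theta      current parameters (being trained on the new task)
    theta_old  parameters saved after training on the old task
    fisher     diagonal Fisher information estimated on the old task
    lam        old-task importance parameter (hypothetical value)
    """
    if not (len(theta) == len(theta_old) == len(fisher)):
        raise ValueError("theta, theta_old and fisher must have equal length")
    weighted_sq_dist = sum(
        f * (t - t0) ** 2 for t, t0, f in zip(theta, theta_old, fisher)
    )
    return 0.5 * lam * weighted_sq_dist


def ewc_total_loss(
    new_task_loss: float,
    theta: Sequence[float],
    theta_old: Sequence[float],
    fisher: Sequence[float],
    lam: float,
) -> float:
    """New-task loss plus the EWC penalty that protects old-task knowledge."""
    return new_task_loss + ewc_penalty(theta, theta_old, fisher, lam)
```

A larger `lam` makes the optimizer more reluctant to move parameters that had high Fisher information on the old task, trading plasticity on the new scenario for retention of the old one.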

Table 5

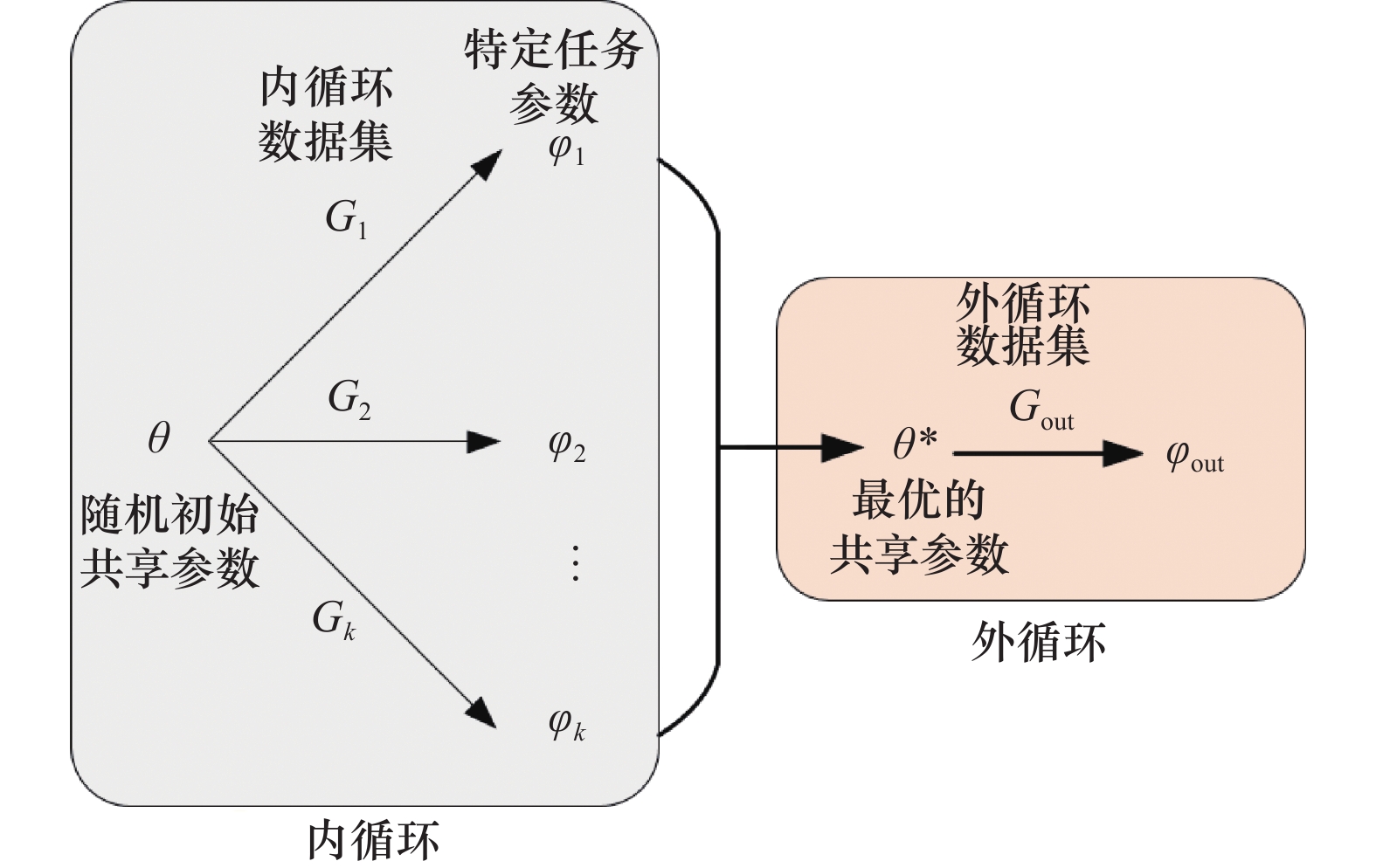

Hyper parameter settings of conflict resolution experiment based on MAML algorithm"

| 超参数 | 参数值 | 超参数 | 参数值 | ||

| 策略网络类型 | MLP | 策略网络层数 | 6 | ||

| 策略网络隐藏层节点数 | 64 | 策略网络更新频率 | 1 | ||

| 价值函数网络类型 | MLP | 价值函数网络层数 | 6 | ||

| 价值函数网络隐藏层节点数 | 64 | 价值函数网络更新频率 | 1 | ||

| 策略网络学习率 | 5e-4 | 价值函数网络学习率 | 5e-4 | ||

| 折扣因子 | 0.99 | 批采样数 | 32 | ||

| 内循环训练步长 | 1e6 | 外循环训练步长 | 1e6 | ||

| 值损失系数 | 0.1 | 熵损失系数 | 0.01 | ||

| 策略损失系数 | 0.1 | 内循环任务数量 | 2 |
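Table 5 separates inner-loop and outer-loop training and sets the number of inner-loop tasks (内循环任务数量) to 2. The toy sketch below illustrates that two-level structure on a scalar parameter with two quadratic tasks. It is an assumption-laden illustration of the general MAML update, not the paper's ACKTR+MAML setup: the task losses, learning rates, and function names are all hypothetical.

```python
from typing import Sequence


def task_grad(theta: float, target: float) -> float:
    """Gradient of the toy task loss 0.5 * (theta - target)**2."""
    return theta - target


def maml_step(
    theta: float,
    targets: Sequence[float],
    inner_lr: float,
    outer_lr: float,
) -> float:
    """One meta-update over all tasks (one inner gradient step per task)."""
    meta_grad = 0.0
    for target in targets:
        # Inner loop: adapt the shared parameter to this task.
        adapted = theta - inner_lr * task_grad(theta, target)
        # Outer gradient: differentiate the post-adaptation loss w.r.t. theta.
        # By the chain rule, d(adapted)/d(theta) = 1 - inner_lr for this loss.
        meta_grad += task_grad(adapted, target) * (1.0 - inner_lr)
    # Outer loop: move the shared initialization along the averaged gradient.
    return theta - outer_lr * meta_grad / len(targets)


def meta_train(
    theta: float,
    targets: Sequence[float],
    inner_lr: float = 0.1,
    outer_lr: float = 0.5,
    steps: int = 200,
) -> float:
    """Repeat the meta-update; returns the learned initialization."""
    for _ in range(steps):
        theta = maml_step(theta, targets, inner_lr, outer_lr)
    return theta


if __name__ == "__main__":
    # Two inner-loop tasks with optima at -1 and +1.
    print(meta_train(3.0, [-1.0, 1.0]))
```

With two symmetric tasks, the learned initialization settles midway between the task optima, which is the point from which a single inner step adapts equally well to either task.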

Table 8 Comparison of results between two experiments (%)

| Model | Original scenario | Training set SR | CLR |

| 93.15 | 87.58 | ||

| 90.06 | 87.44 | ||

| 94.55 | 89.27 | ||

| 94.55 | 89.08 | ||

| 91.88 | 88.73 | ||

| 91.88 | 88.95 |


