Systems Engineering and Electronics, 2022, Vol. 44, Issue (3): 977-985. doi: 10.12305/j.issn.1001-506X.2022.03.30

Guidance, Navigation and Control

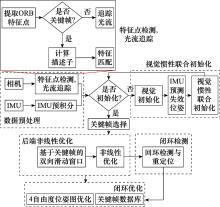

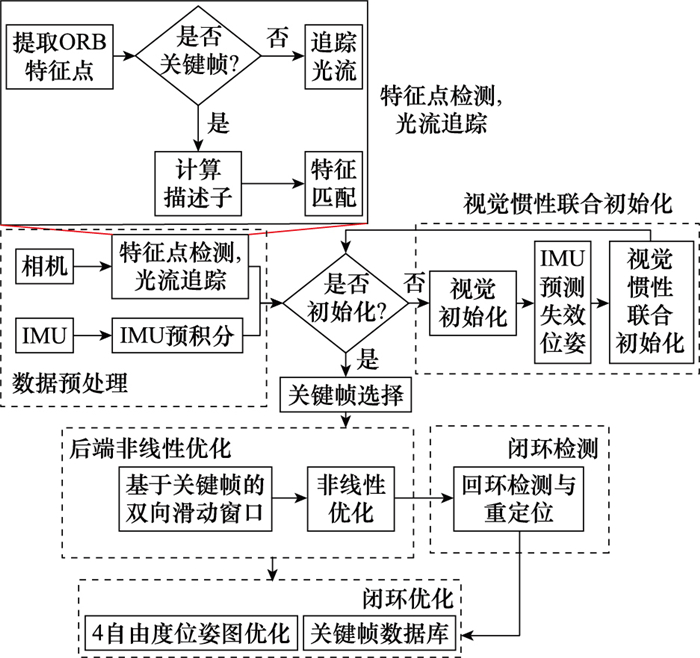

Visual-inertial SLAM method based on multi-scale optical flow fusion feature point

Tongdian WANG, Jieyu LIU*, Zongshou WU, Qiang SHEN, Erliang YAO

- College of Missile Engineering, Rocket Force University of Engineering, Xi'an 710025, China


Received: 2021-07-02; Online: 2022-03-01; Published: 2022-03-10

Contact: Jieyu LIU


Cite this article

Tongdian WANG, Jieyu LIU, Zongshou WU, Qiang SHEN, Erliang YAO. Visual-inertial SLAM method based on multi-scale optical flow fusion feature point[J]. Systems Engineering and Electronics, 2022, 44(3): 977-985.


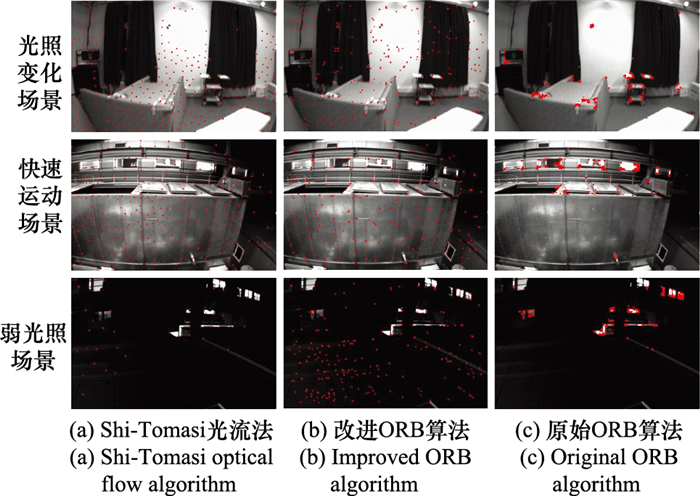

Table 2

Comparison of algorithm metrics across multiple scenes

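| Scene | Algorithm | Feature points | Matching rate/% | Time/ms | Uniformity |
|---|---|---|---|---|---|
| Illumination change | ORB algorithm | 491 | 81.4 | 24.05 | 0.28 |
| Illumination change | Shi-Tomasi optical flow | 136 | 64.7 | 16.89 | 0.68 |
| Illumination change | Proposed ORB algorithm | 365 | 68.2 | 21.6 | 0.79 |
| Weak illumination | ORB algorithm | 495 | 88.0 | 29.52 | 0.24 |
| Weak illumination | Shi-Tomasi optical flow | 72 | 89.2 | 14.26 | 0.38 |
| Weak illumination | Proposed ORB algorithm | 295 | 81.1 | 20.56 | 0.75 |
| Fast motion | ORB algorithm | 497 | 78.20 | 23.26 | 0.32 |
| Fast motion | Shi-Tomasi optical flow | 366 | 88.25 | 20.39 | 0.78 |
| Fast motion | Proposed ORB algorithm | 298 | 91.60 | 21.41 | 0.82 |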
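Table 2 reports a uniformity score for each detector, but the table does not define that score or say how the proposed ORB variant raises it. Purely as an illustration, one common way to spread keypoints more evenly over an image is quadtree selection: repeatedly split the most crowded region into four quadrants, then keep only the strongest keypoint in each region. The sketch below assumes keypoints arrive as (x, y, response) records; `Keypoint` and `quadtree_select` are hypothetical names, and this is a simplified version of the general technique, not the authors' method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Keypoint:
    """Hypothetical keypoint record: pixel position plus detector response."""
    x: float
    y: float
    response: float


def quadtree_select(points, width, height, target):
    """Keep at most `target` keypoints, spread over the image by quadtree splitting.

    The most populated node is split into quadrants until there are at least
    `target` non-empty nodes or no node can be split further; the strongest
    keypoint of each node is then kept.
    """
    nodes = [((0.0, 0.0, float(width), float(height)), list(points))]
    while len(nodes) < target:
        # A node is splittable if it holds several points and is larger than 1 px.
        splittable = [
            n for n in nodes
            if len(n[1]) > 1 and n[0][2] - n[0][0] > 1 and n[0][3] - n[0][1] > 1
        ]
        if not splittable:
            break
        node = max(splittable, key=lambda n: len(n[1]))
        nodes.remove(node)
        (x0, y0, x1, y1), pts = node
        xm, ym = (x0 + x1) / 2, (y0 + y1) / 2
        children = {}
        for p in pts:
            # Assign each point to a quadrant by comparing it with the node centre.
            children.setdefault((p.x >= xm, p.y >= ym), []).append(p)
        for (right, bottom), child_pts in children.items():
            box = (xm if right else x0, ym if bottom else y0,
                   x1 if right else xm, y1 if bottom else ym)
            nodes.append((box, child_pts))
    # Strongest keypoint per non-empty node, strongest first, capped at `target`.
    best = [max(pts, key=lambda p: p.response) for _, pts in nodes if pts]
    best.sort(key=lambda p: p.response, reverse=True)
    return best[:target]
```

For example, with ten strong keypoints clustered near the top-left corner of a 640 × 480 image and one weak keypoint near the bottom-right, taking the two highest responses directly would return two neighbouring cluster points, whereas `quadtree_select(..., 640, 480, 2)` keeps the strongest cluster point and the isolated one.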

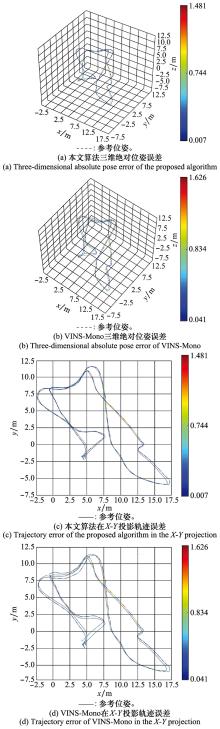

Table 3

Comparison of the error quantification index between the proposed algorithm and VINS-Mono"

| 序列 | MH-05-difficult | |

| VINS-Mono | 本文算法 | |

| Max | 1.510 9 | 1.480 7 |

| Mean | 0.448 1 | 0.350 0 |

| Median | 0.409 0 | 0.322 9 |

| Min | 0.016 9 | 0.007 2 |

| Rmse | 0.486 1 | 0.406 9 |

| Std | 0.188 3 | 0.207 6 |

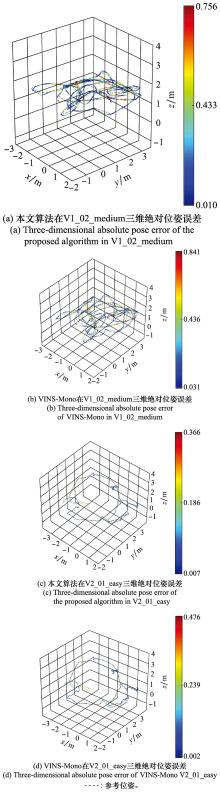

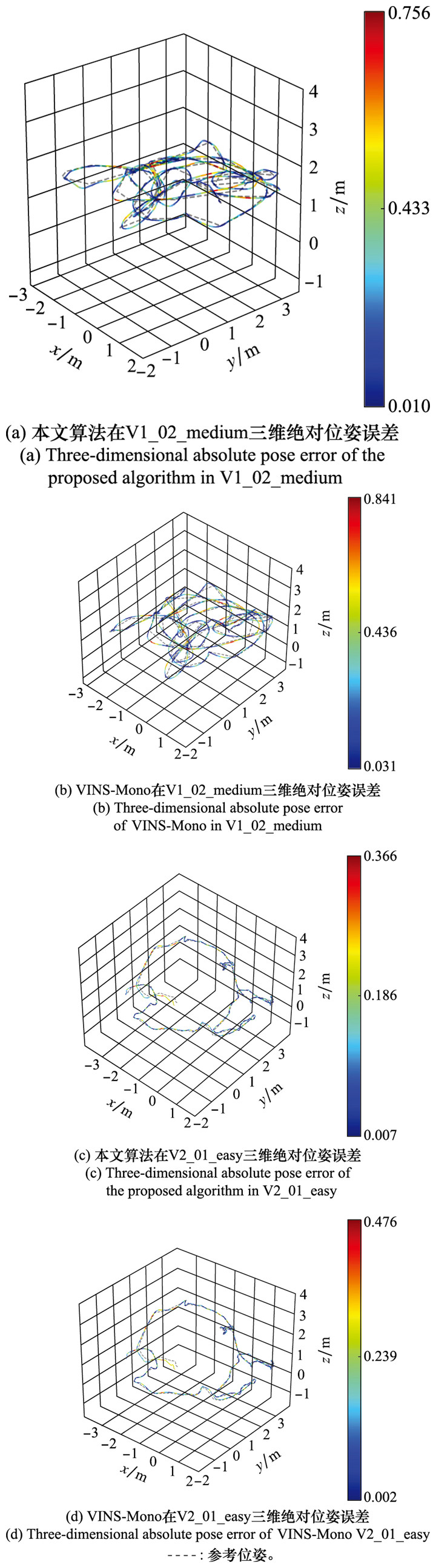

| 序列 | V1-02-medium | |

| VINS-Mono | 本文算法 | |

| Max | 0.841 0 | 0.756 1 |

| Mean | 0.256 5 | 0.242 5 |

| Median | 0.210 0 | 0.198 8 |

| Min | 0.031 1 | 0.009 5 |

| Rmse | 0.308 2 | 0.300 6 |

| Std | 0.170 8 | 0.163 2 |

| 序列 | V2-01-easy | |

| VINS-Mono | 本文算法 | |

| Max | 0.475 8 | 0.365 9 |

| Mean | 0.117 8 | 0.106 8 |

| Median | 0.101 1 | 0.090 1 |

| Min | 0.002 4 | 0.006 7 |

| Rmse | 0.137 1 | 0.125 6 |

| Std | 0.070 0 | 0.066 1 |
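The six metrics in Table 3 are standard summary statistics of a per-pose error sequence; the names match the summary printed by trajectory-evaluation tools such as evo. The sketch below assumes the input is a list of per-pose absolute trajectory errors (the table does not state the error type or unit) and uses the population standard deviation, for which RMSE² = mean² + std² holds exactly. That identity reproduces, for example, the VINS-Mono row for MH-05-difficult: sqrt(0.4481² + 0.1883²) ≈ 0.4861. `error_stats` and `relative_reduction` are hypothetical helper names.

```python
import math
import statistics


def error_stats(errors):
    """Summarise per-pose errors with the six statistics used in Table 3."""
    if not errors:
        raise ValueError("need at least one error value")
    return {
        "max": max(errors),
        "mean": statistics.fmean(errors),
        "median": statistics.median(errors),
        "min": min(errors),
        "rmse": math.sqrt(sum(e * e for e in errors) / len(errors)),
        # Population standard deviation, so rmse**2 == mean**2 + std**2.
        "std": statistics.pstdev(errors),
    }


def relative_reduction(baseline, proposed):
    """Percentage by which `proposed` is lower than `baseline`."""
    return (baseline - proposed) / baseline * 100.0
```

Applied to the RMSE column, `relative_reduction` gives reductions of about 16.3 % on MH-05-difficult, 2.5 % on V1-02-medium and 8.4 % on V2-01-easy for the proposed algorithm relative to VINS-Mono.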

| 1 | 张国良, 姚二亮, 林志林, 等. 融合直接法与特征法的快速双目SLAM算法[J]. 机器人, 2017, 39 (6): 879- 888. |

| ZHANG G L , YAO E L , LIN Z L , et al. Fast binocular SLAM algorithm combining the direct method and the feature-based method[J]. Robot, 2017, 39 (6): 879- 888. | |

| 2 | 施俊屹, 查富生, 孙立宁, 等. 移动机器人视觉惯性SLAM研究进展[J]. 机器人, 2020, 42 (6): 734- 748. |

| SHI J Y , ZHA F S , SUN L N , et al. A survey of visual-inertial SLAM for mobile robots[J]. Robot, 2020, 42 (6): 734- 748. | |

| 3 | WANG P F, CHEN N, ZHA F S, et al. Research on adaptive Monte Carlo location algorithm aided by ultra-wideband array[C]// Proc. of the IEEE 13th World Congress on Intelligent Control and Automation, 2018: 566-571. |

| 4 | 张小国, 刘启汉, 李尚哲, 等. 基于双步边缘化与关键帧筛选的改进视觉惯性SLAM方法[J]. 中国惯性技术学报, 2020, 28 (5): 608- 614. |

| ZHANG X G , LIU Q H , LI S Z , et al. An approach to improve VI-SLAM based on two-step marginalization and keyframe selection method[J]. Journal of Chinese Inertial Technology, 2020, 28 (5): 608- 614. | |

| 5 |

GUI J J , GU D B , WANG S , et al. A review of visual inertial odometry from filtering and optimisation perspectives[J]. Advanced Robotics, 2015, 29 (20): 1289- 1301.

doi: 10.1080/01691864.2015.1057616 |

| 6 | SMITH R , SELF M , CHEESEMAN P . Estimating uncertain spatial relationships in robotics[J]. Machine Intelligence & Pattern Recognition, 1988, 5 (5): 435- 461. |

| 7 |

JONES E S , SOATTO S . Visual-inertial navigation, mapping and localization: a scalable real-time causal approach[J]. International Journal of Robotics Research, 2011, 30 (4): 407- 430.

doi: 10.1177/0278364910388963 |

| 8 |

KELLY J , SUKHATME G S . Visual-inertial sensor fusion: localization, mappingand sensor-to-sensor self-calibration[J]. International Journal of Robotics Research, 2011, 30 (1): 56- 79.

doi: 10.1177/0278364910382802 |

| 9 | MOURIKIS A I, ROUMELIOTIS S I. A multi-state constraint Kalman filter for vision-aided inertial navigation[C]//Proc. of the IEEE International Conference on Robotics and Automation, 2007: 3565-3572. |

| 10 | BLOESCH M, OMARI S, HUTTER M, et al. Robust visual inertial odometry using a direct EKF-based approach[C]//Proc. of the IEEE/RSJ International Conference on Intelligent Robots and Systems, 2015: 298-304. |

| 11 | LEUTENEGGER S , LYENE S , BOSSE M , et al. Keyframe-based visual inertial odometry using nonlinear optimization[J]. International Journal of Robotics Research, 2014, 34 (3): 314- 334. |

| 12 | 夏琳琳, 沈冉, 迟德儒, 等. 一种基于光流-线特征的单目视觉-惯性SLAM算法[J]. 中国惯性技术学报, 2020, 28 (5): 568- 575. |

| XIA L L , SHEN R , CHI D R , et al. An optical flow-line feature based monocular visual-inertial SLAM algorithm[J]. Journal of Chinese Inertial Technology, 2020, 28 (5): 568- 575. | |

| 13 |

MUR-ARTAL R , TARDOS J D . Visual-inertial monocular SLAM with map reuse[J]. IEEE Robotics and Automation Letters, 2017, 2 (2): 796- 803.

doi: 10.1109/LRA.2017.2653359 |

| 14 |

MUR-ARTAL R , MONTIEL J M M , TARDOS J D . ORB-SLAM: a versatile and accurate monocular SLAM system[J]. IEEE Trans.on Robotics, 2015, 31 (5): 1147- 1163.

doi: 10.1109/TRO.2015.2463671 |

| 15 | FORSTER C, PIZZOLI M, SCARAMUZZA D. SVO: fast semi-direct monocular visual odometry[C]//Proc. of the IEEE International Conference on Robotics and Automation, 2014: 15-22. |

| 16 |

FORSTER C , ZHANG Z , GASSNER M , et al. SVO: semidirect visual odometry for monocular and multicamera systems[J]. IEEE Trans.on Robotics, 2017, 33 (2): 249- 265.

doi: 10.1109/TRO.2016.2623335 |

| 17 | 龚赵慧, 张霄力, 彭侠夫, 等. 基于视觉惯性融合的半直接单目视觉里程计[J]. 机器人, 2020, 42 (5): 595- 605. |

| GONG Z H , ZHANG X L , PENG X F , et al. Semi-direct monocular visual odometry based on visual-inertial fusion[J]. Robot, 2020, 42 (5): 595- 605. | |

| 18 | 刘艳娇, 张云洲, 荣磊, 等. 基于直接法与惯性测量单元融合的视觉里程计[J]. 机器人, 2019, 41 (5): 683- 689. |

| LIU Y J , ZHANG Y Z , RONG L , et al. Visual odometry based on the direct method and the inertial measurement unit[J]. Robot, 2019, 41 (5): 683- 689. | |

| 19 |

QIN T , LI P L , SHEN S J . VINS-Mono: a robust and versatile monocular visual-inertial state estimator[J]. IEEE Trans.on Robotics, 2018, 34 (4): 1004- 1020.

doi: 10.1109/TRO.2018.2853729 |

| 20 | TATENO K, TOMBARI F, LAINA I, et al. CNN-SLAM: real-time dense monocular SLAM with learned depth prediction[C]// Proc. of the IEEE Conference on Computer Vision and Pattern Recognition, 2017: 6565-6574. |

| 21 | 齐乃新, 杨小冈, 李小峰, 等. 基于ORB特征和LK光流的视觉里程计算法[J]. 仪器仪表学报, 2018, 39 (12): 216- 227. |

| QI N X , YANG X G , LI X F , et al. Visual odometry algorithm based on ORB features and LK optical flow[J]. Chinese Journal of Scientific Instrument, 2018, 39 (12): 216- 227. | |

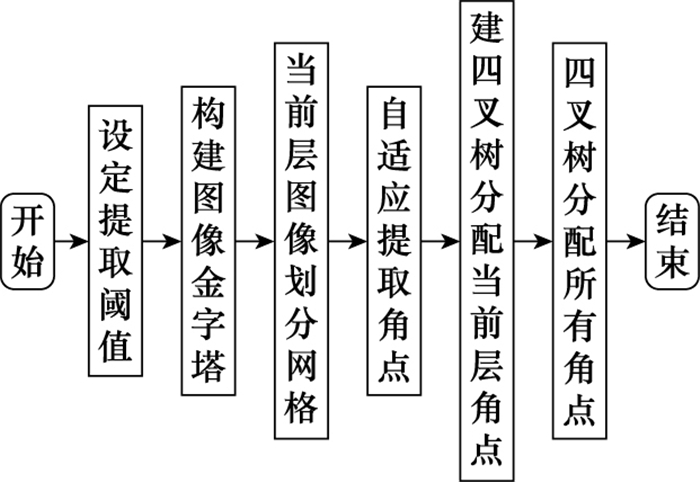

| 22 | 刘宏伟, 余辉亮, 梁艳阳. ORB特征四叉树均匀分布算法[J]. 自动化仪表, 2018, 39 (5): 52- 54, 59. |

| LIU H W , YU H L , LIANG Y Y . ORB feature quadtree uniform distribution algorithm[J]. Process Automation Instrumentation, 2018, 39 (5): 52- 54, 59. | |

| 23 | FURGALE P, REHDER J, SIEGWART R. Unified temporal and spatial calibration for multi-sensor systems[C]//Proc. of the IEEE /RSJ International Conference on Intelligent Robots and Systems, 2013: 1280-1286. |

| 24 | BAY H , TUYTELAARS T , GOOL L V . SURF: speeded up robust features[J]. Computer Vision & Image Understanding, 2006, 110 (3): 404- 417. |

| 25 | 姚晋晋, 张鹏超, 王彦, 等. 基于改进四叉树的ORB特征均匀分布算法[J]. 计算机工程与设计, 2020, 41 (6): 1629- 1634. |

| YAO J J , ZHANG P C , WANG Y , et al. ORB feature uniform distribution algorithm based on improved quadtree[J]. Computer Engineering and Design, 2020, 41 (6): 1629- 1634. | |

| 26 | 郑驰, 项志宇, 刘济林. 融合光流与特征点匹配的单目视觉里程计[J]. 浙江大学学报(工学版), 2014, 48 (2): 279- 284. |

| ZHENG C , XIANG Z Y , LIU J L . Monocular vision odometry based on the fusion of optical flow and feature points matching[J]. Journal of Zhejiang University(Engineering Science), 2014, 48 (2): 279- 284. | |

| 27 |

ZHOU J M , ZHAO X M , CHENG X , et al. Vehicle ego-localization based on streetscape image database under blind area of global positioning system[J]. Journal of Shanghai Jiaotong University (Science), 2019, 24 (1): 122- 129.

doi: 10.1007/s12204-018-2008-8 |

| 28 |

FORSTER C , CARLONE L , DELLAERT F , et al. On-manifold preintegration for real-time visual-inertial odometry[J]. IEEE Trans.on Robotics, 2017, 33 (1): 1- 21.

doi: 10.1109/TRO.2016.2597321 |

| 29 | LOWRY S , SUNDERHAUF N , NEWMAN P , et al. Visual place recognition: a survey[J]. IEEE Trans.on Robotics, 2015, 32 (1): 1- 19. |

| 30 |

BURRI M , NIKOLIC J , GOHL P , et al. The EuRoC micro aerial vehicle datasets[J]. International Journal of Robotics Research, 2016, 35 (10): 1157- 1163.

doi: 10.1177/0278364915620033 |
