Systems Engineering and Electronics, 2022, Vol. 44, Issue (2): 410-419. doi: 10.12305/j.issn.1001-506X.2022.02.07

• Electronic Technology •

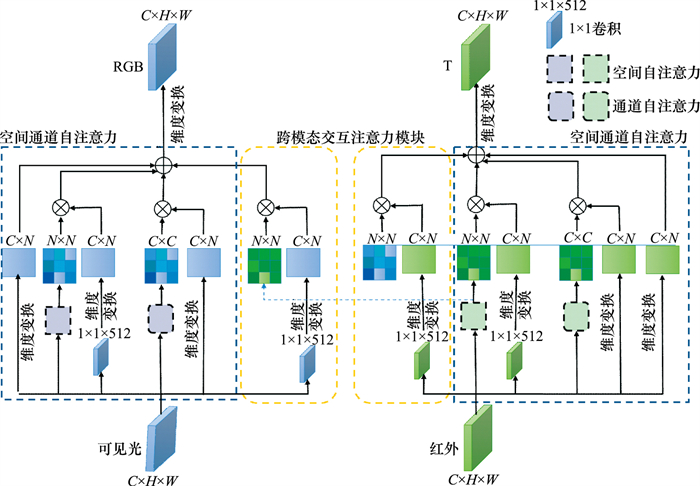

Target tracking network based on dual-modal interactive fusion under attention mechanism

Yunxiang YAO, Ying CHEN*

- College of Computer Internet of Things, Jiangnan University, Wuxi 214122, China

Received: 2021-01-28; Online: 2022-02-18; Published: 2022-02-24

Contact: Ying CHEN

Cite this article

Yunxiang YAO, Ying CHEN. Target tracking network based on dual-modal interactive fusion under attention mechanism[J]. Systems Engineering and Electronics, 2022, 44(2): 410-419.

Table 1

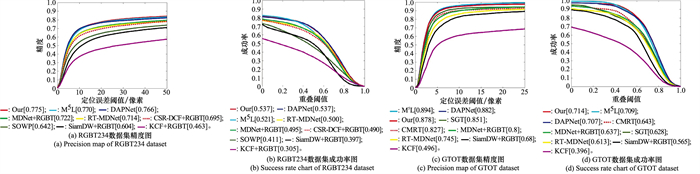

Comparison results of PR/SR scores of different trackers under different challenges on RGBT234"

| Challenge attribute | MDNet+RGB-T PR | MDNet+RGB-T SR | RT-MDNet+RGB-T PR | RT-MDNet+RGB-T SR | CFNet+RGB-T PR | CFNet+RGB-T SR | CMRT PR | CMRT SR | SiamDW+RGB-T PR | SiamDW+RGB-T SR | DAPNet PR | DAPNet SR | M5L PR | M5L SR | Ours PR | Ours SR |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| BC | 0.644 | 0.432 | 0.725 | 0.455 | 0.463 | 0.308 | 0.631 | 0.398 | 0.519 | 0.323 | 0.717 | 0.484 | 0.766 | 0.498 | 0.753 | 0.483 |
| CM | 0.640 | 0.454 | 0.644 | 0.455 | 0.417 | 0.318 | 0.629 | 0.447 | 0.562 | 0.382 | 0.668 | 0.474 | 0.716 | 0.500 | 0.711 | 0.498 |
| DEF | 0.668 | 0.473 | 0.670 | 0.466 | 0.523 | 0.367 | 0.667 | 0.473 | 0.558 | 0.390 | 0.717 | 0.578 | 0.727 | 0.500 | 0.723 | 0.507 |
| FM | 0.586 | 0.363 | 0.637 | 0.387 | 0.454 | 0.299 | 0.613 | 0.384 | 0.597 | 0.365 | 0.670 | 0.443 | 0.659 | 0.420 | 0.740 | 0.467 |
| HO | 0.619 | 0.421 | 0.618 | 0.404 | 0.417 | 0.290 | 0.563 | 0.377 | 0.520 | 0.337 | 0.660 | 0.444 | 0.662 | 0.457 | 0.682 | 0.456 |
| LI | 0.670 | 0.455 | 0.737 | 0.474 | 0.523 | 0.369 | 0.742 | 0.498 | 0.600 | 0.399 | 0.775 | 0.530 | 0.761 | 0.495 | 0.766 | 0.576 |
| LR | 0.759 | 0.493 | 0.760 | 0.483 | 0.551 | 0.365 | 0.687 | 0.420 | 0.605 | 0.370 | 0.750 | 0.510 | 0.762 | 0.496 | 0.784 | 0.513 |
| MB | 0.654 | 0.463 | 0.612 | 0.429 | 0.357 | 0.271 | 0.600 | 0.427 | 0.494 | 0.340 | 0.653 | 0.467 | 0.670 | 0.472 | 0.709 | 0.505 |
| NO | 0.862 | 0.611 | 0.894 | 0.586 | 0.764 | 0.563 | 0.895 | 0.616 | 0.783 | 0.534 | 0.900 | 0.644 | 0.904 | 0.619 | 0.870 | 0.639 |
| PO | 0.761 | 0.518 | 0.780 | 0.517 | 0.597 | 0.417 | 0.777 | 0.536 | 0.608 | 0.396 | 0.817 | 0.544 | 0.821 | 0.574 | 0.826 | 0.572 |
| SV | 0.735 | 0.505 | 0.735 | 0.482 | 0.596 | 0.433 | 0.710 | 0.493 | 0.609 | 0.405 | 0.772 | 0.513 | 0.780 | 0.542 | 0.773 | 0.545 |
| TC | 0.756 | 0.517 | 0.786 | 0.513 | 0.457 | 0.327 | 0.675 | 0.443 | 0.569 | 0.368 | 0.768 | 0.538 | 0.781 | 0.543 | 0.748 | 0.536 |
| All | 0.722 | 0.495 | 0.734 | 0.483 | 0.551 | 0.390 | 0.711 | 0.486 | 0.604 | 0.397 | 0.766 | 0.537 | 0.770 | 0.521 | 0.775 | 0.537 |
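The PR and SR columns in Table 1 are the two scores usually reported for tracking benchmarks. This page does not spell out how they were evaluated, so the following is only a minimal sketch of the common definitions, under stated assumptions: PR (precision rate) is taken as the fraction of frames whose predicted box center lies within a pixel threshold of the ground-truth center, and SR (success rate) as the mean of the success curve over a sweep of overlap thresholds. The function names, the `(x, y, w, h)` box format, the 20-pixel threshold, and the 21-step sweep are illustrative assumptions, not details confirmed by the source.

```python
from math import hypot


def iou(a, b):
    """Intersection over union of two boxes given as (x, y, w, h)."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    iw = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    ih = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = iw * ih
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def center_error(a, b):
    """Euclidean distance between the centers of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return hypot((ax + aw / 2) - (bx + bw / 2), (ay + ah / 2) - (by + bh / 2))


def precision_rate(pred, gt, threshold=20.0):
    """Fraction of frames with center error <= threshold (assumed 20 px)."""
    hits = sum(center_error(p, g) <= threshold for p, g in zip(pred, gt))
    return hits / len(gt)


def success_rate(pred, gt, steps=21):
    """Mean of the success curve over overlap thresholds 0, 0.05, ..., 1."""
    overlaps = [iou(p, g) for p, g in zip(pred, gt)]
    thresholds = [i / (steps - 1) for i in range(steps)]
    # For each threshold, the share of frames whose overlap exceeds it.
    curve = [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]
    return sum(curve) / len(curve)
```

Both functions take per-frame lists of predicted and ground-truth boxes for one sequence. Benchmark scores such as the "All" row are typically aggregated over many sequences, and the per-attribute rows over the subset of sequences annotated with that challenge.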
